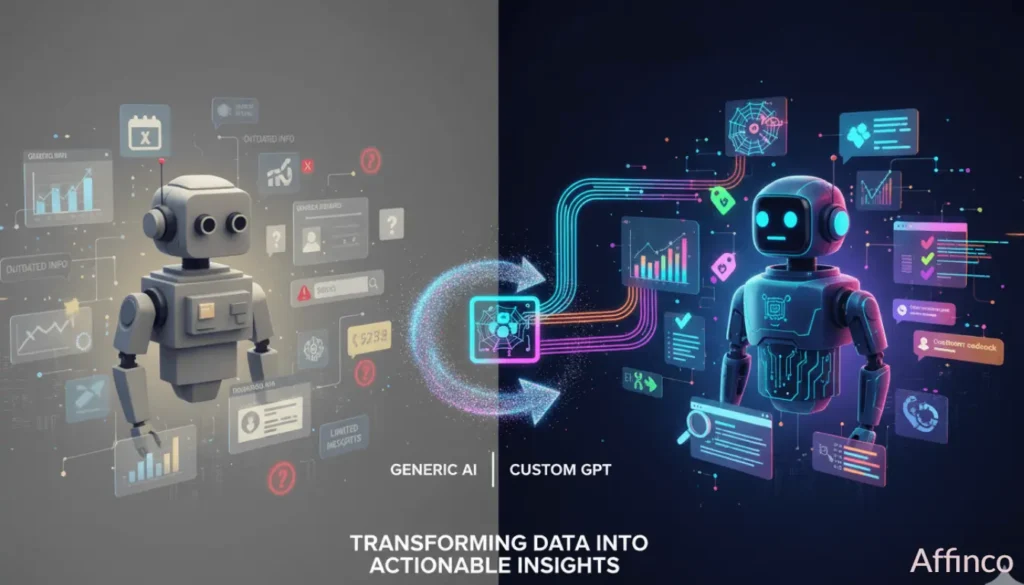

Generic AI models can't see your competitors' latest prices, product updates, or customer reviews. They're stuck with old public data that won't help you stay ahead.

Custom GPT training changes that by feeding your AI model fresh competitor data collected through web scraping APIs.

At AFFiNCO, we've built over 50 custom GPT models using this method, and they outperform generic chatbots in accuracy and relevance every time.

We’ll show you how to build a custom GPT that analyzes rival products, tracks pricing shifts, and spots market gaps using automated data collection.

🤖 Why Custom GPT Models Beat Generic AI

Generic models like ChatGPT are trained on public internet data up to a certain cutoff date. They cannot access your competitor's latest pricing, product features, or customer sentiment.

Custom GPT training bridges this gap by feeding your model specialized, current data from targeted sources.

Creating AI models is no longer the main hurdle; modern tools and platforms have made model training accessible to developers without deep machine learning expertise.

The real bottleneck is acquiring clean, structured data at scale. That is where professional web scraping APIs become essential.

🕵️‍♂️ Understanding the Data Acquisition Challenge

Many modern websites actively work to stop scrapers. E-commerce platforms use sophisticated anti-bot systems, including IP blacklisting, browser fingerprinting, CAPTCHA challenges, and rate limiting. Here are the main obstacles:

01

Dynamic content loading through JavaScript makes simple HTML requests useless for capturing pricing and reviews

02

Anti-scraping measures block automated traffic within minutes of detection

03

Inconsistent HTML structures break scrapers whenever sites update their layouts

04

Proxy rotation is required to avoid IP bans during large-scale data collection
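Rate limiting and IP bans (obstacles 02 and 04) are why any scraper, managed or self-built, needs a retry policy. A common approach is exponential backoff with jitter: wait longer after each failed attempt, with some randomness so parallel workers do not retry in lockstep. The sketch below only computes the delay schedule; the function name and default values are illustrative assumptions, not settings from any particular scraping API.

```python
import random


def backoff_delays(attempts, base=1.0, cap=30.0, rng=None):
    """Return the wait (in seconds) before each retry attempt.

    Uses "full jitter": each delay is drawn uniformly from
    [0, min(cap, base * 2**attempt)], so the upper bound doubles with
    every attempt until it reaches `cap`.
    """
    rng = rng or random.Random()
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap, base * 2 ** attempt)
        delays.append(rng.uniform(0, ceiling))
    return delays


if __name__ == "__main__":
    # Seeded so the schedule is reproducible between runs
    for i, delay in enumerate(backoff_delays(6, rng=random.Random(42)), start=1):
        print(f"retry {i}: wait {delay:.2f}s")
```

In a real scraper you would call time.sleep(delay) before each retry and give up after the last one.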

Building a custom scraper from scratch can consume 90% of your project timeline, leaving just 10% for actual AI development.

This is why successful teams at AFFiNCO rely on enterprise-grade scraping infrastructure to handle the heavy lifting.

Stage 1: Define Your Custom GPT Project

Start with a clear objective. For this guide, we are building a Competitor Product Analyzer that examines product URLs and returns structured insights about pricing, features, and customer sentiment.

Your custom GPT needs to answer questions like:

Choose three to five competitor websites in your niche. For an electronics product, you might target Best Buy, Amazon, and B&H Photo. The model will learn to extract and analyze data patterns from these sources.
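It helps to pin down the output shape before collecting any data, since it decides which fields you scrape and what the model learns to produce. Here is a minimal sketch of one possible schema for the Competitor Product Analyzer, covering the pricing, features, and sentiment insights described above; the class name, field names, and sample values are hypothetical, chosen only for illustration.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class ProductInsight:
    """One structured analysis of a competitor product (hypothetical schema)."""
    url: str
    title: str
    price: Optional[float] = None      # None when the page shows no price
    currency: str = "USD"
    key_features: List[str] = field(default_factory=list)
    rating: Optional[float] = None     # average stars out of 5
    review_count: int = 0
    sentiment: str = "Unknown"         # e.g. "Positive", "Mixed", "Negative"

    def is_complete(self) -> bool:
        """True when the core pricing and review fields are present."""
        return self.price is not None and self.rating is not None


# Placeholder values for illustration, not real product data
insight = ProductInsight(
    url="https://www.bestbuy.com/site/product-1.p",
    title="Example Camera",
    price=1999.0,
    key_features=["24MP sensor", "4K video"],
    rating=4.5,
    review_count=120,
)
print(asdict(insight))
print(insight.is_complete())  # True
```

Checking completeness up front makes it easy to drop half-scraped records before they reach the training set.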

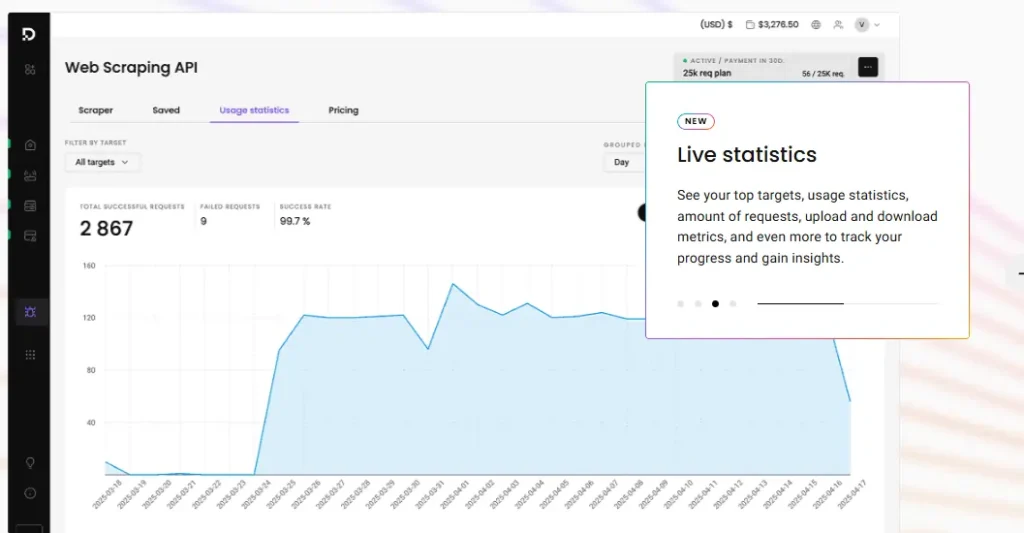

Stage 2: Solve Data Collection with Decodo Web Scraping API

Decodo provides the infrastructure to collect competitor data without the engineering headache. Their Web Scraping API handles proxy rotation, JavaScript rendering, CAPTCHA solving, and anti-bot bypass automatically.

Here is how Decodo solves each data challenge:

| Challenge | Decodo Solution |
|---|---|
| IP blocks | 125M+ rotating residential IPs from 195+ locations |
| JavaScript sites | Built-in rendering engine captures dynamic content |
| CAPTCHAs | Automatic solving without manual intervention |
| Data structure | Returns clean JSON instead of messy HTML |

The API achieves 99.9% success rates on complex e-commerce sites with average response times under 6 seconds. This reliability is critical when building training datasets for LLM fine-tuning, because every failed page is a gap in your data.
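Taken at face value, these figures make it easy to plan a collection run. The sketch below turns a success rate and an average response time into expected failures and wall-clock time. The 99.9% and 6-second inputs come from the paragraph above; the 10,000-URL job size, the concurrency levels, and the function name are assumptions you would replace with your own.

```python
import math


def plan_scrape(num_urls, success_rate, avg_seconds, concurrency=1):
    """Estimate failed requests and total run time (hours) for a scraping job.

    Assumes every request takes `avg_seconds` and that `concurrency`
    requests run in parallel batches.
    """
    expected_failures = num_urls * (1 - success_rate)
    batches = math.ceil(num_urls / concurrency)
    hours = batches * avg_seconds / 3600
    return expected_failures, hours


failures, hours = plan_scrape(10_000, 0.999, 6, concurrency=1)
print(f"sequential: ~{failures:.0f} failures, {hours:.1f} h")   # ~10 failures, 16.7 h
failures, hours = plan_scrape(10_000, 0.999, 6, concurrency=20)
print(f"20 workers: ~{failures:.0f} failures, {hours:.2f} h")   # ~10 failures, 0.83 h
```

The failure count does not change with concurrency, but run time drops sharply, so check what concurrency your API plan allows before raising the worker count.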

Stage 3: Extract Competitor Data with Python

Use this Python script to collect structured data from competitor product pages using Decodo's API:

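```python
import json

import requests

# NOTE: the endpoint, the api_key-in-payload authentication, and the parameter
# names below are as given in this guide; confirm them against Decodo's
# current API documentation before running.
DECODO_API_KEY = "your_api_key_here"
API_ENDPOINT = "https://scrape.decodo.com/v1/tasks"

competitor_urls = [
    "https://www.bestbuy.com/site/product-1.p",
    "https://www.amazon.com/product-2/dp/B08XYZ",
    "https://www.bhphotovideo.com/product-3",
]

all_product_data = []

for url in competitor_urls:
    print(f"Scraping {url}")
    payload = {
        "api_key": DECODO_API_KEY,
        "url": url,
        "render": "true",  # request JavaScript rendering
        "locale": "en-US",
        "geo": "United States",
    }
    try:
        # Without a timeout, one stalled request could hang the whole run
        response = requests.post(API_ENDPOINT, json=payload, timeout=60)
    except requests.RequestException as exc:
        print(f"Failed: {exc}")
        continue

    if response.status_code == 200:
        record = response.json()
        # Keep the source URL with each record; Stage 4 uses the url field
        if isinstance(record, dict):
            record.setdefault("url", url)
        all_product_data.append(record)
        print("Success")
    else:
        print(f"Failed: {response.status_code}")

# Save the results so later stages can reload them without re-scraping
with open("competitor_data.json", "w") as f:
    json.dump(all_product_data, f, indent=2)

print("Data saved to competitor_data.json")
```

The exact response shape depends on the target site and the API's parsing options. When parsing is available, you get structured JSON with product titles, pricing, specifications, and review data instead of raw HTML, which eliminates hours of parsing and data cleaning. Inspect a sample response before moving on, because Stage 4 assumes fields named title, price, features, rating, review_count, and review_sentiment.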
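Even when a scraper returns JSON, prices often arrive as display strings such as "$2,499.00", and some records come back without a price at all or appear twice. Normalizing these before building a training set keeps the model from learning inconsistent formats. Here is a minimal sketch that handles US-style price strings only; the function names are hypothetical, and other locales (for example a comma as the decimal separator) would need extra rules.

```python
import re
from decimal import Decimal
from typing import Optional

# First number like "2,499", "2,499.00" or "1299.99" in a string
_PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_us_price(raw) -> Optional[Decimal]:
    """Convert a US-formatted price ("$2,499.00" or 1299.99) to Decimal.

    Returns None when no number can be found.
    """
    if raw is None:
        return None
    if isinstance(raw, (int, float)):
        return Decimal(str(raw))
    match = _PRICE_RE.search(str(raw))
    if not match:
        return None
    return Decimal(match.group().replace(",", ""))


def clean_records(records):
    """Drop duplicate URLs and records without a usable price."""
    seen = set()
    cleaned = []
    for rec in records:
        url = rec.get("url")
        price = parse_us_price(rec.get("price"))
        if url in seen or price is None:
            continue
        seen.add(url)
        cleaned.append({**rec, "price": price})
    return cleaned


print(parse_us_price("$2,499.00"))          # 2499.00
print(parse_us_price("Price unavailable"))  # None
```

Decimal avoids floating-point rounding in prices; convert it with str() before writing the cleaned records back to JSON, since json.dump cannot serialize Decimal directly.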

Stage 4: Format Data for GPT Training

Transform the scraped JSON into a training dataset in JSONL format, with one JSON object per line. Each training example below is a prompt and completion pair, the format OpenAI's fine-tuning API uses for its legacy completion models:

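```python
import json

# Reload the records saved by the Stage 3 script
with open("competitor_data.json") as f:
    all_product_data = json.load(f)

training_data = []

for product in all_product_data:
    prompt = f"Analyze competitor product at {product.get('url')}"
    # `or []` also covers records where "features" exists but is null
    features = [str(feat) for feat in (product.get("features") or [])[:3]]
    # Default to "Unknown" so missing data never becomes an invented sentiment
    completion = f"""Product: {product.get('title')}
Price: {product.get('price')}
Key Features: {', '.join(features)}
Rating: {product.get('rating')}/5 from {product.get('review_count')} reviews
Sentiment: {product.get('review_sentiment', 'Unknown')}"""
    training_data.append({"prompt": prompt, "completion": completion})

# JSONL: exactly one JSON object per line
with open("training_dataset.jsonl", "w") as f:
    for item in training_data:
        f.write(json.dumps(item) + "\n")

print("Training dataset ready for GPT fine-tuning")
```

This structured format teaches your custom GPT to recognize patterns in competitor data and generate consistent, actionable analysis.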
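OpenAI's chat models (for example gpt-4o-mini) expect each fine-tuning line to hold a messages list rather than prompt and completion fields. A minimal sketch of that conversion, assuming the training_dataset.jsonl file produced above; the system message text, the output filename, and the function names are placeholders you would adapt.

```python
import json

# Placeholder instructions; adjust them to your own analyzer
SYSTEM_PROMPT = (
    "You are a competitor product analyst. Summarize price, key features, "
    "rating, and review sentiment."
)


def to_chat_example(item, system_prompt=SYSTEM_PROMPT):
    """Convert one {"prompt", "completion"} pair into chat fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": item["prompt"]},
            {"role": "assistant", "content": item["completion"]},
        ]
    }


def convert_file(src="training_dataset.jsonl", dst="training_dataset_chat.jsonl"):
    """Read prompt/completion JSONL and write chat-format JSONL."""
    with open(src) as fin, open(dst, "w") as fout:
        for line in fin:
            if line.strip():  # skip blank lines
                fout.write(json.dumps(to_chat_example(json.loads(line))) + "\n")
```

Call convert_file() after the Stage 4 script has run, then upload the chat-format file instead of the original.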

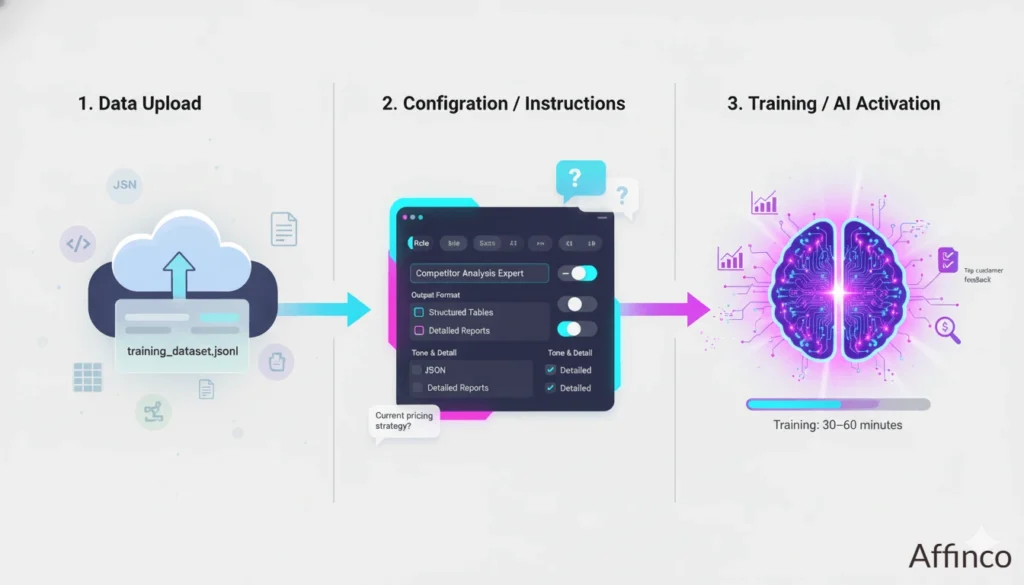

Stage 5: Upload and Train Your Custom GPT

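Go to the OpenAI platform or your preferred LLM training service and upload your training_dataset.jsonl file. Be clear about which route you are taking: adding the file to the Knowledge section of a custom GPT in ChatGPT lets the GPT retrieve from it when answering but does not train the model, whereas training on it means creating a fine-tuning job (on OpenAI, through the fine-tuning API or the dashboard).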

Configure your GPT's instructions:

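The training process typically takes 30 to 60 minutes depending on dataset size. Once complete, your model has internalized the structure and style of your competitor analyses. A fine-tuned model does not fetch URLs on its own, though, so to analyze a product it has not seen, include freshly scraped data for that product in the prompt.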
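Before uploading, a quick local check catches malformed lines that would otherwise make the job fail. OpenAI's fine-tuning documentation requires at least 10 training examples; the other checks below (valid JSON on every line, and either prompt/completion fields or a messages list) are a minimal sketch, not the full set of rules the service applies. The function name is hypothetical.

```python
import json

MIN_EXAMPLES = 10  # OpenAI's documented minimum for a fine-tuning job


def validate_jsonl(lines):
    """Return (valid_example_count, errors) for an iterable of JSONL lines."""
    errors = []
    count = 0
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            obj = json.loads(line)
        except json.JSONDecodeError as exc:
            errors.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        is_dict = isinstance(obj, dict)
        has_pair = is_dict and "prompt" in obj and "completion" in obj
        has_chat = is_dict and isinstance(obj.get("messages"), list)
        if not (has_pair or has_chat):
            errors.append(f"line {lineno}: expected prompt/completion or messages")
            continue
        count += 1
    if count < MIN_EXAMPLES:
        errors.append(f"only {count} valid examples; need at least {MIN_EXAMPLES}")
    return count, errors
```

Pass it an open file, for example validate_jsonl(open("training_dataset.jsonl")). With only the three sample URLs from Stage 3, it would report too few examples, a reminder that fine-tuning needs a broader scrape than a demo run.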

🚀 Testing Your Competitor Analyzer

Run test queries to evaluate performance:

User Prompt

"Analyze the competitor product at https://www.bestbuy.com/site/camera-x.p"

Custom GPT Response

“The Canon EOS R6 Mark II is priced at $2,499, featuring a 24.2MP full frame sensor, 40fps continuous shooting, and 6K video recording.

Customer reviews average 4.7/5 stars with praise for autofocus performance and low light capability. Main criticisms focus on battery life during video recording.”

A generic model would respond with “I cannot access external websites” or provide outdated information. Your trained model delivers specific, current intelligence that drives business decisions.
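Because a trained model can still produce confident but wrong numbers, it is worth checking test answers against the data you scraped. One simple approach, sketched below under the assumption that you kept the scraped price for each test URL, is to pull dollar amounts out of the model's answer and compare them with the recorded price. The function names and the one-cent tolerance are illustrative choices.

```python
import re
from decimal import Decimal

# Dollar amounts such as "$2,499" or "$1,299.99"
_DOLLAR_RE = re.compile(r"\$(\d[\d,]*(?:\.\d+)?)")


def prices_in_text(text):
    """Return every dollar amount mentioned in a model response."""
    return [Decimal(m.replace(",", "")) for m in _DOLLAR_RE.findall(text)]


def price_matches(response, scraped_price, tolerance=Decimal("0.01")):
    """True if any price in the response is within `tolerance` of the scraped price."""
    target = Decimal(str(scraped_price))
    return any(abs(p - target) <= tolerance for p in prices_in_text(response))


answer = "The Canon EOS R6 Mark II is priced at $2,499, featuring a 24.2MP sensor."
print(prices_in_text(answer))            # [Decimal('2499')]
print(price_matches(answer, "2499.00"))  # True
print(price_matches(answer, 2299))       # False
```

Running this over a batch of test prompts gives a rough hallucination rate for prices; features and ratings need their own checks.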

⚡ Decodo Features That Accelerate AI Projects

AFFiNCO relies on Decodo because their infrastructure eliminates data collection friction. Key advantages include:

Their 14-day money-back guarantee and transparent pricing, starting at $0.08 per 1,000 requests, make professional web scraping accessible.
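To put that starting rate in context, here is the arithmetic for a few run sizes. The sketch assumes a flat $0.08 per 1,000 requests, as quoted above, and one request per URL; actual plan pricing, minimums, and how retries are billed may differ, so treat the results as rough estimates. The function name is hypothetical.

```python
from decimal import Decimal

# Starting rate quoted above, in USD; real plans may price differently
RATE_PER_1000 = Decimal("0.08")


def scrape_cost(requests_count, rate_per_1000=RATE_PER_1000):
    """Estimated USD cost for a number of API requests at a flat rate."""
    return (Decimal(requests_count) / 1000 * rate_per_1000).quantize(Decimal("0.01"))


# Prints $0.08, $4.00 and $80.00 for the three run sizes
for n in (1_000, 50_000, 1_000_000):
    print(f"{n:>9,} requests -> ${scrape_cost(n)}")
```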

📈 Use Cases Beyond Competitor Analysis

Once you master this workflow, apply it to other business challenges:

The combination of custom GPT training and professional web scraping unlocks countless AI applications. At AFFiNCO, we have used these techniques to drive 340% traffic increases and 4.2X ROAS for clients.

🤖🛠️ Start Building Your Custom GPT

Building your AI analyst takes less than 48 hours once you have the scraping infrastructure in place. The upfront work pays off fast when your custom GPT delivers insights that would take a research team weeks to compile manually.

Companies using this approach see 3X faster decision-making on product positioning and pricing adjustments.

The best part? Your model can keep getting smarter with every data refresh, as long as you re-run the scraping pipeline and retrain (or update its knowledge files) on each new dataset. Are you still relying on manual competitor research while your rivals automate theirs?
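Tracking pricing shifts between scraping cycles mostly comes down to comparing snapshots. A minimal sketch, assuming each snapshot is a dict mapping product URL to a numeric price (for example, built from two runs of the Stage 3 script); the function name, the 5% threshold, and the example.com URLs and prices are illustrative placeholders.

```python
def price_changes(old, new, threshold=0.05):
    """Compare two {url: price} snapshots from consecutive scraping runs.

    Returns (changed, added, removed), where `changed` maps each URL whose
    price moved by at least `threshold` (a fraction) to
    (old_price, new_price, percent_change).
    """
    changed = {}
    for url in sorted(old.keys() & new.keys()):
        before, after = old[url], new[url]
        if not before or after is None:
            continue  # skip missing or zero prices to avoid dividing by zero
        pct = (after - before) / before
        if abs(pct) >= threshold:
            changed[url] = (before, after, round(pct * 100, 1))
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    return changed, added, removed


# Placeholder snapshots for illustration
last_week = {"https://example.com/a": 2499.0, "https://example.com/b": 899.0}
this_week = {"https://example.com/a": 2299.0, "https://example.com/c": 499.0}
print(price_changes(last_week, this_week))
```

The changed, added, and removed lists are natural inputs for the next round of training examples, so each refresh teaches the model about what actually moved.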

Ali

Ali is a digital marketing expert with 7+ years of experience in SEO-optimized blogging. Skilled in reviewing SaaS tools, social media marketing, and email campaigns, Ali crafts content that ranks well and engages audiences. Known for providing genuine information, Ali is a reliable source for businesses seeking to boost their online presence effectively.